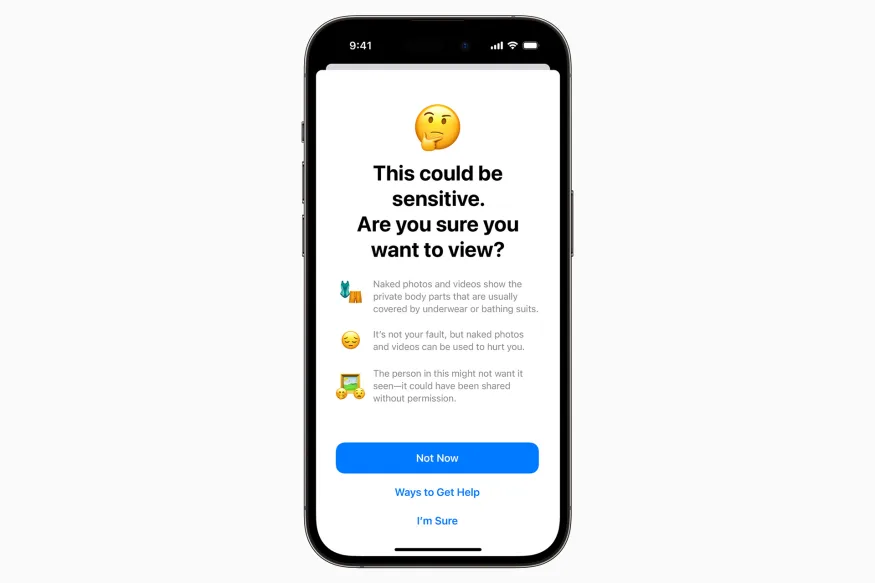

Fresh off the success of WWDC23, Apple is using iOS 17 to step up protection against unwanted iMessage attachments, which is both fascinating and impressive. To help adults avoid unwanted nude images and videos, the tech giant has announced that its next software release will include a Sensitive Content Warning feature that should noticeably improve our in-app experience. In short, iOS 17 adds protections against the kind of content that could, let’s say, ruin our day.

So in practice, we’ll be able to decline anything that could upset us just by choosing between a few options. Easy as that!

What’s best is how kids are protected from now on, thanks to Communication Safety. Sexually explicit content sent or received via AirDrop, FaceTime messages, Contact Posters, or the Photos picker will be automatically detected and blurred using machine learning. Apple says it does not get access to the content either. To protect your children’s accounts this way, you’ll need to turn on Family Sharing.

Just imagine how smooth the process will be! The technology has also advanced to the point that it can now detect sensitive content in videos as well as still images, saving us from unpleasant moments. If upsetting material is received, kids can ask a trusted adult for help just by sending a quick message. Quite impressive, isn’t it?

In 2021, Apple announced plans to identify photos uploaded to iCloud that contain known child sexual abuse material (CSAM), alongside initiatives to sharply reduce unwanted nudes. But at the end of 2022, the Cupertino-based tech giant abandoned this strategy out of concern that governments might pressure it to expand its photo scanning to other kinds of content.

That is why both Sensitive Content Warning and Communication Safety process media locally on the user’s device.
