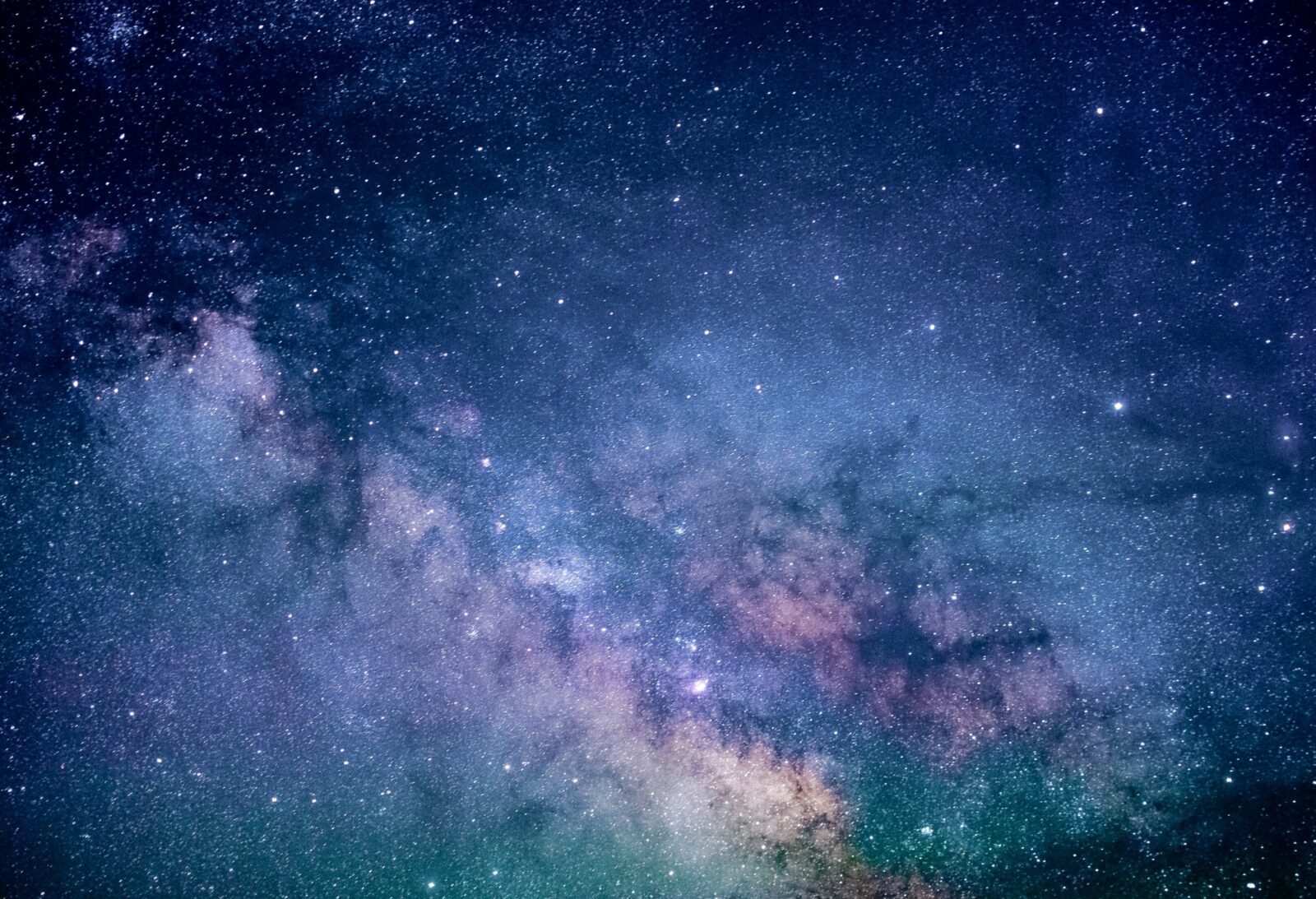

Albert Einstein’s general theory of relativity does a good job of explaining the gravity of planets and stars, but its applicability on the very smallest and the very largest scales is less clear.

Gravity is universal: everything with mass or energy feels it and produces it. Yet despite being the most ubiquitous of the fundamental forces, it has proven one of the hardest for modern physicists to understand.

Einstein’s general theory of relativity has been remarkably successful at describing the gravity of stars and planets. From Eddington’s 1919 measurement of the deflection of starlight by the Sun to the recent detection of gravitational waves, it has stood the test of time in the laboratory of observation. Yet it does not appear to apply perfectly on all scales.

Our understanding begins to break down when we try to apply it at extremely small distances, where quantum mechanics governs, or to describe the universe as a whole.

A new study published in Nature Astronomy has put Einstein’s theory to the test on the largest scales. The researchers hope their method will one day shed light on some of cosmology’s most puzzling questions, and their preliminary results hint that general relativity may need adjusting on this scale.

According to quantum theory, even empty space, the vacuum, is filled with energy. We cannot detect this energy directly, because our instruments can only measure changes in energy rather than its total amount. According to Einstein’s theory, however, vacuum energy should have a repulsive gravity that pushes empty space apart.

Interestingly, it was discovered in 1998 that the expansion of the universe is in fact accelerating. However, the amount of vacuum energy, or dark energy, needed to explain this acceleration is many orders of magnitude smaller than what quantum theory predicts.

This leads to what is known as the “old cosmological constant problem”: does vacuum energy actually gravitate, exerting a gravitational force and changing the rate at which the universe expands?

If it does, why is its gravity so much weaker than predicted? And if the vacuum does not gravitate at all, what is causing the universe to speed up? We do not know what dark energy is, but we have to assume it exists to explain the accelerating expansion. Similarly, we have to assume the existence of dark matter, an invisible form of matter, to account for the observed properties of galaxies and galaxy clusters.

These assumptions underpin the standard cosmological model, known as the lambda cold dark matter (LCDM) model, in which the universe consists of roughly 70% dark energy, 25% dark matter, and 5% ordinary matter. And this model has been remarkably successful at explaining the data cosmologists have gathered over the past two decades.

Even so, because most of the universe consists of dark components whose nature we do not understand and whose values are puzzling, many physicists have wondered whether Einstein’s theory of gravity needs to be modified to describe the universe as a whole.

A few years ago, a new wrinkle emerged, known as the Hubble tension: different methods of measuring the rate of cosmic expansion, the Hubble constant, were found to give different results.

The tension is a mismatch between two estimates of the Hubble constant. One is the value predicted by the LCDM model, which was constructed to fit the light left over from the Big Bang (the cosmic microwave background). The other is the expansion rate measured from observations of supernovae in distant galaxies. Many theoretical proposals have been put forward for modifying LCDM to account for the Hubble tension; alternative theories of gravity are one example.
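One simple way to gauge how serious such a mismatch is: express the gap between two independent estimates in units of their combined uncertainty. The Python sketch below does this under the assumption of Gaussian, uncorrelated errors. The input numbers are approximate published figures quoted only for illustration (the Planck 2018 CMB-based LCDM fit and the SH0ES supernova measurement); they do not come from this article, and the helper name `tension_sigma` is hypothetical.

```python
import math

# Approximate published values in km/s/Mpc, quoted for illustration only
# (assumption: not from the article; check the primary sources):
# Planck 2018 CMB-based LCDM fit, and the SH0ES supernova distance ladder (2022).
h0_cmb, err_cmb = 67.4, 0.5
h0_sn, err_sn = 73.04, 1.04

def tension_sigma(a, err_a, b, err_b):
    """Hypothetical helper: gap between two independent Gaussian estimates,
    in units of their uncertainties added in quadrature."""
    return abs(a - b) / math.sqrt(err_a**2 + err_b**2)

print(f"Hubble tension: {tension_sigma(h0_cmb, err_cmb, h0_sn, err_sn):.1f} sigma")
```

With these inputs the gap comes out just under five standard deviations. A quadrature sum like this ignores any correlated or unknown systematic errors, which is one reason the interpretation of the tension is still debated.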

The study

There are ways to test whether the universe obeys Einstein’s theory. According to general relativity, gravity warps space and time, bending the paths along which light and matter travel. Crucially, it predicts that gravity should bend the paths of light rays and of matter in the same way.
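One common way to turn this prediction into something measurable, assumed here for illustration because the article does not spell out the study’s equations, is to introduce two functions of time and scale. The first, μ, rescales the gravitational pull felt by matter. The second, Σ, rescales the bending of light. In one widely used convention, Ψ and Φ are the metric potentials, ρ is the matter density, Δ its density contrast, a the cosmic scale factor and k the wavenumber of a perturbation:

```latex
\begin{aligned}
k^2 \Psi &= -4\pi G\, a^2\, \mu(a,k)\, \rho\, \Delta, \\
k^2 (\Phi + \Psi) &= -8\pi G\, a^2\, \Sigma(a,k)\, \rho\, \Delta .
\end{aligned}
```

In general relativity, Φ = Ψ and μ = Σ = 1 at all times and on all scales. A measured departure from 1 in either function would therefore signal that light and matter do not respond to gravity as Einstein’s theory predicts. Sign and normalization conventions vary between papers.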

The study’s authors, working with a team of cosmologists, set out to test the fundamental principles of general relativity. They also explored whether modifying Einstein’s theory could help resolve the Hubble tension and other open problems in cosmology.

To find out whether general relativity holds on large scales, they investigated three of its aspects simultaneously for the first time: the accelerating expansion of the universe, the effect of gravity on light, and the effect of gravity on the motion of matter. Using these three ingredients and the statistical technique of Bayesian inference, they reconstructed in a computer model how gravity has evolved over the history of the universe.
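Bayesian inference combines a prior belief about a parameter with the likelihood of the observed data to produce a posterior probability distribution. The study’s reconstruction is far more elaborate, but the core idea can be sketched in a few lines of Python. Everything specific here is a hypothetical assumption chosen for illustration: the parameter name `mu`, the convention that `mu = 1` corresponds to general relativity, the flat prior, the error bar and the four synthetic measurements. None of it is the study’s data or code.

```python
import math

# Synthetic, made-up measurements of a hypothetical parameter "mu"
# (assumption: mu = 1 corresponds to general relativity). NOT data from the study.
measurements = [1.08, 0.97, 1.12, 1.05]
sigma = 0.1  # assumed Gaussian uncertainty on each measurement

def log_likelihood(mu):
    """Gaussian log-likelihood of all measurements given mu (up to a constant)."""
    return -0.5 * sum(((m - mu) / sigma) ** 2 for m in measurements)

def log_prior(mu):
    """Flat prior on mu over [0, 2]; zero probability outside that range."""
    return 0.0 if 0.0 <= mu <= 2.0 else -math.inf

# Evaluate the unnormalized log-posterior on a grid of candidate mu values.
grid = [i / 1000 for i in range(2001)]  # 0.000, 0.001, ..., 2.000
log_post = [log_prior(mu) + log_likelihood(mu) for mu in grid]

# Subtract the maximum before exponentiating (numerical stability), then normalize.
peak = max(log_post)
weights = [math.exp(lp - peak) for lp in log_post]
total = sum(weights)
posterior = [w / total for w in weights]

# Posterior mean and standard deviation of mu.
mean = sum(mu * p for mu, p in zip(grid, posterior))
std = math.sqrt(sum((mu - mean) ** 2 * p for mu, p in zip(grid, posterior)))

# Distance of the GR value (mu = 1) from the posterior mean, in standard deviations.
tension = abs(mean - 1.0) / std

print(f"mu = {mean:.3f} +/- {std:.3f}; GR value is {tension:.1f} sigma away")
```

With these made-up inputs the posterior peaks near 1.055 with a width of about 0.05, so the general relativity value sits only about one standard deviation away: a hint of a mismatch, but far from statistically significant. Real analyses replace the grid with sampling methods such as Markov chain Monte Carlo once there are many parameters.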

They estimated the model’s parameters using cosmic microwave background data from the Planck satellite, supernova catalogues, and observations of the shapes and distribution of distant galaxies from the Sloan Digital Sky Survey (SDSS) and the Dark Energy Survey (DES). They then compared their reconstruction with the prediction of the LCDM model and found interesting hints of a possible mismatch with Einstein’s prediction, albeit with low statistical significance.

Nonetheless, this raises the possibility that gravity behaves differently on large scales and that general relativity may be incomplete. The study also found that it is very difficult to resolve the Hubble tension by modifying the theory of gravity alone.

A complete solution would probably require a new ingredient in the cosmological model, one already present before protons and electrons first combined to form hydrogen shortly after the Big Bang, such as an early form of dark energy, primordial magnetic fields, or a special kind of dark matter.

Or perhaps there is some as-yet-unknown systematic error in the data. Either way, the study has shown that observational data can be used to test general relativity over cosmological distances.
